Function DimensionStorage.addHierarchy has been incorrectly called.

Update has been canceled because of an error.

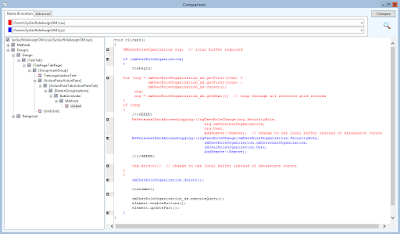

The error occurs at the following line in DimensionStorage\addHierarchy().

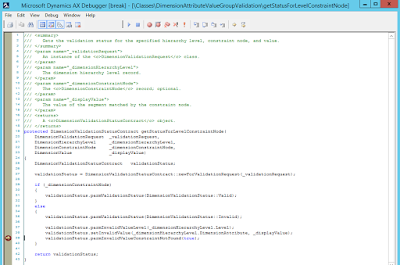

The root of the problem is data corruption in the GeneralJournalAccountEntry (GJAE) table. Specifically, in some rare cases the LedgerDimension field refers to a DimensionAttributeValueCombination (DAVC) record that has no AccountStructure. This appears to happen when a default account combination is used in the UX instead of one with the appropriate LedgerDimensionType of Account.

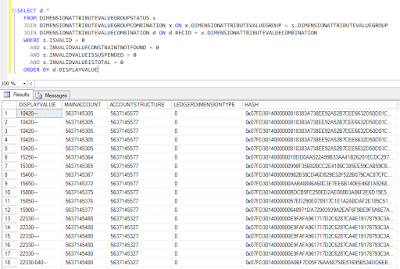

For example, these GeneralJournalAccountEntry records refer to LedgerDimension 5637145331 (a RecId that is, of course, specific to this environment).

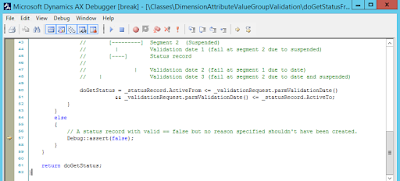

That DimensionAttributeValueCombination record refers to a Main account, but the AccountStructure field is 0 and the LedgerDimensionType field is 1, i.e. LedgerDimensionType::DefaultAccount.

That absence of an AccountStructure is the root cause of the original error, and in fact this DAVC record with a LedgerDimensionType of 1 should never be referenced from the ledger at all.
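To make that invariant concrete, here is a minimal Python sketch that models a DAVC record as a plain dataclass and checks whether it is fit to be referenced from the ledger. The class `Davc`, the function `is_valid_ledger_reference` and the `main_account` value are hypothetical and for illustration only; they are not AX APIs. The only enum value relied on is the one observed above (LedgerDimensionType 1 = DefaultAccount).

```python
from dataclasses import dataclass

# LedgerDimensionType::DefaultAccount, as observed on the record above.
DEFAULT_ACCOUNT = 1


@dataclass(frozen=True)
class Davc:
    """Hypothetical in-memory stand-in for a DimensionAttributeValueCombination row."""
    rec_id: int
    main_account: int           # RecId of the Main account (placeholder value below)
    account_structure: int      # 0 means no AccountStructure is attached
    ledger_dimension_type: int  # 1 == LedgerDimensionType::DefaultAccount


def is_valid_ledger_reference(davc: Davc) -> bool:
    """True if the combination has an AccountStructure and is not a
    DefaultAccount combination, i.e. it may be referenced from the ledger."""
    return davc.account_structure != 0 and davc.ledger_dimension_type != DEFAULT_ACCOUNT


# The defective record described above (main_account is a made-up placeholder).
defective = Davc(rec_id=5637145331, main_account=1001,
                 account_structure=0, ledger_dimension_type=DEFAULT_ACCOUNT)
print(is_valid_ledger_reference(defective))  # False
```

Checking both fields, rather than only AccountStructure, reflects the two symptoms described above. Either one alone marks the record as unfit for the ledger.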

It is possible to locate all such records with the following SQL.

Note that, whether by coincidence or not, in every case I have detected the PostingType field on the GJAE record is 45 (LedgerPostingType::VendCashDisc). I strongly suspect that KB3080798 (details at the bottom of this post) is the fix for this issue, though I have not yet tested it to confirm.
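The detection logic is a join from GJAE to DAVC filtered on the defective fields, followed by a tally by PostingType to test the VendCashDisc pattern. It can be modelled in a few lines of Python. This is a sketch over in-memory records, not the SQL query referred to above. The classes and `find_defective_entries` are hypothetical. Apart from 5637145331, the RecIds and the posting type 14 are made-up placeholders, and `ledger_dimension_type=0` is only assumed to stand for an Account-type combination (the check only cares about the value 1).

```python
from collections import Counter
from dataclasses import dataclass

DEFAULT_ACCOUNT = 1   # LedgerDimensionType::DefaultAccount
VEND_CASH_DISC = 45   # LedgerPostingType::VendCashDisc


@dataclass(frozen=True)
class Davc:
    """Hypothetical stand-in for a DimensionAttributeValueCombination row."""
    rec_id: int
    account_structure: int
    ledger_dimension_type: int


@dataclass(frozen=True)
class Gjae:
    """Hypothetical stand-in for a GeneralJournalAccountEntry row."""
    rec_id: int
    ledger_dimension: int  # RecId of the referenced Davc
    posting_type: int


def find_defective_entries(entries, combos):
    """Return the entries whose LedgerDimension points at a DAVC with no
    AccountStructure or with LedgerDimensionType DefaultAccount."""
    by_rec_id = {c.rec_id: c for c in combos}
    result = []
    for entry in entries:
        davc = by_rec_id.get(entry.ledger_dimension)
        if davc is not None and (davc.account_structure == 0
                                 or davc.ledger_dimension_type == DEFAULT_ACCOUNT):
            result.append(entry)
    return result


combos = [
    Davc(rec_id=5637145331, account_structure=0, ledger_dimension_type=DEFAULT_ACCOUNT),
    Davc(rec_id=5637150000, account_structure=5637144576, ledger_dimension_type=0),
]
entries = [
    Gjae(rec_id=1, ledger_dimension=5637145331, posting_type=VEND_CASH_DISC),
    Gjae(rec_id=2, ledger_dimension=5637150000, posting_type=14),
    Gjae(rec_id=3, ledger_dimension=5637145331, posting_type=VEND_CASH_DISC),
]

defective = find_defective_entries(entries, combos)
print([e.rec_id for e in defective])               # [1, 3]
# Tally by PostingType to check the VendCashDisc pattern noted above.
print(Counter(e.posting_type for e in defective))  # Counter({45: 2})
```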

The repair of this data corruption is beyond the scope of this post. In principle, the fix is to replace the defective LedgerDimension with the LedgerDimension of an equivalent but proper DAVC record, taking care not to change the Main account so that ledger balances are unaffected.
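The rule above is to swap the LedgerDimension but never the Main account. As a minimal sketch of it, the Python below remaps in-memory records and refuses any replacement whose Main account differs. It touches no database. It also assumes the mapping from each defective DAVC to its proper replacement has already been worked out. The classes, `remap_entries` and the placeholder values (other than 5637145331) are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Davc:
    """Hypothetical stand-in for a DimensionAttributeValueCombination row."""
    rec_id: int
    main_account: int


@dataclass(frozen=True)
class Gjae:
    """Hypothetical stand-in for a GeneralJournalAccountEntry row."""
    rec_id: int
    ledger_dimension: int


def remap_entries(entries, combos, replacements):
    """Return copies of the entries with each defective LedgerDimension swapped
    for its replacement, refusing any swap that would change the Main account."""
    by_rec_id = {c.rec_id: c for c in combos}
    repaired = []
    for entry in entries:
        new_id = replacements.get(entry.ledger_dimension)
        if new_id is None:
            repaired.append(entry)  # not in the replacement map; leave untouched
            continue
        old, new = by_rec_id[entry.ledger_dimension], by_rec_id[new_id]
        if old.main_account != new.main_account:
            raise ValueError(f"entry {entry.rec_id}: Main account would change")
        repaired.append(replace(entry, ledger_dimension=new_id))
    return repaired


combos = [Davc(rec_id=5637145331, main_account=1001),
          Davc(rec_id=5637150000, main_account=1001)]
entries = [Gjae(rec_id=1, ledger_dimension=5637145331),
           Gjae(rec_id=2, ledger_dimension=5637150000)]
repaired = remap_entries(entries, combos, {5637145331: 5637150000})
print([e.ledger_dimension for e in repaired])  # [5637150000, 5637150000]
```

Raising instead of silently skipping a mismatched pair is deliberate. A replacement that changes the Main account would move amounts between accounts, which is exactly what the repair must avoid.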

KB 3080798

Vendor cash discounts posting without a financial dimension regardless of account structure limits

Product and version

Microsoft Dynamics AX 2012 R3

Fix type: Application hotfix

PROBLEM

You can post vendor cash discounts without a financial dimension even though you have an account structure specifically set up to prevent data entry errors.

DESCRIPTION OF CHANGE

The changes in the hotfix address only the defaulting order; they do not add the validation, and the validation will not be added.